
Voice AI Technology in 2025: How AI Assistants Transform Speech to Conversation

Ever wondered what happens when you ask your smart speaker a question? Let’s follow the journey from your words to the AI’s answer, a process that is changing how we talk to machines in 2025.

How Voice AI Systems Work

Today’s voice AI systems are built in one of two main ways.

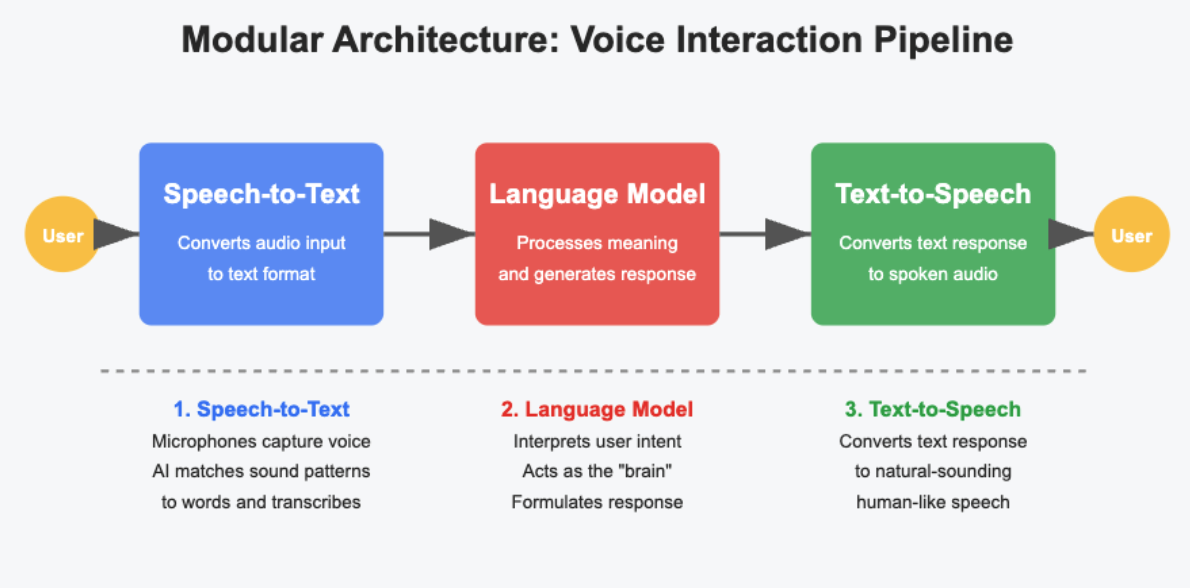

Modular Architecture: The Pipeline

This approach breaks a voice interaction into separate components that run one after another:

- Speech-to-Text: Microphones pick up your voice, and a speech recognition model turns the audio into text by matching sound patterns to words.

- Language Model Processing: The text goes to a large language model (LLM) that works out what you mean and generates a response. This is the “brain” of the system.

- Text-to-Speech: The AI’s answer is turned back into speech that sounds human-like.
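To make the hand-offs in the modular pipeline concrete, here is a minimal Python sketch. The `VoicePipeline` class and the `fake_stt`, `fake_llm`, and `fake_tts` functions are hypothetical placeholders, not any real product’s API; a real system would plug an actual speech recognizer, language model, and speech synthesizer into each slot.

```python
from dataclasses import dataclass
from typing import Callable

# Hypothetical stage signatures: audio bytes in, text, text, audio bytes out.
SpeechToText = Callable[[bytes], str]
LanguageModel = Callable[[str], str]
TextToSpeech = Callable[[str], bytes]


@dataclass
class VoicePipeline:
    """A cascaded (modular) voice pipeline: STT -> LLM -> TTS."""
    stt: SpeechToText
    llm: LanguageModel
    tts: TextToSpeech

    def respond(self, audio_in: bytes) -> bytes:
        text = self.stt(audio_in)   # 1. speech to text
        reply = self.llm(text)      # 2. language model builds a reply
        return self.tts(reply)      # 3. reply text back to speech


# Hypothetical stand-ins so the sketch runs without any real models.
def fake_stt(audio: bytes) -> str:
    return audio.decode("utf-8")    # pretend the "audio" is already text


def fake_llm(text: str) -> str:
    return f"You said: {text}"


def fake_tts(text: str) -> bytes:
    return text.encode("utf-8")     # pretend these bytes are synthesized audio


pipeline = VoicePipeline(fake_stt, fake_llm, fake_tts)
print(pipeline.respond(b"what time is it"))  # b'You said: what time is it'
```

Because each stage is a separate component, one piece (say, the TTS voice) can be swapped without touching the others. The trade-off is that in the simplest version each stage waits for the previous one to finish, so their delays add up.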

Unified Architecture: The Direct Method

Popularized in late 2024 by OpenAI’s Realtime API, this newer approach works differently:

- A single model handles everything from speech input to speech output in one step. Skipping the hand-offs between separate components reduces delay, so conversations flow more naturally.

How Your Voice Becomes an Answer

When you speak to a voice assistant, here’s what happens:

- Voice Capture: Your device’s microphones record your voice, and the audio is processed in tiny chunks of about 10–20 milliseconds each. Each chunk is converted into acoustic features, numeric patterns describing the sound, that the recognizer can analyze.

- Speech Recognition: The system filters out background noise and works out which words you said. Modern systems can handle a range of accents and speaking styles.

- Understanding Language: Once your speech becomes text, the AI:

  - Breaks down your sentence

  - Picks out key info (names, dates, places)

  - Figures out what you want

  - Senses how you feel

- Remembering Context: The AI keeps track of your conversation history. This lets you ask follow-up questions without repeating yourself.

- Creating Responses: The AI generates an answer based on your question and the conversation history. Many systems also pull in information from outside sources, such as web search or databases, to keep answers accurate.

- Making Speech: Text-to-speech turns the response into natural-sounding speech with appropriate pauses and tone.
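The voice-capture step can be illustrated by splitting a digitized signal into fixed-length frames. This is a minimal sketch assuming 16 kHz mono audio, non-overlapping 20 ms frames, and a simple energy threshold standing in for noise filtering; `frame_audio`, `frame_energy`, and the threshold value are illustrative choices, not what any particular assistant uses.

```python
import math


def frame_audio(samples: list[float], sample_rate: int, frame_ms: int = 20) -> list[list[float]]:
    """Split mono audio into consecutive, non-overlapping frames of frame_ms milliseconds.

    The last frame is zero-padded if the signal does not divide evenly.
    """
    frame_len = sample_rate * frame_ms // 1000  # samples per frame (320 at 16 kHz, 20 ms)
    frames = []
    for start in range(0, len(samples), frame_len):
        frame = samples[start:start + frame_len]   # slicing copies, so the input is untouched
        frame += [0.0] * (frame_len - len(frame))  # pad a short final frame
        frames.append(frame)
    return frames


def frame_energy(frame: list[float]) -> float:
    """Mean squared amplitude of one frame."""
    return sum(x * x for x in frame) / len(frame)


sample_rate = 16_000
# One second of hypothetical input: 0.5 s of silence, then 0.5 s of a 440 Hz tone.
silence = [0.0] * 8_000
tone = [0.5 * math.sin(2 * math.pi * 440 * n / sample_rate) for n in range(8_000)]
frames = frame_audio(silence + tone, sample_rate)

# Crude stand-in for noise filtering: keep only frames above an energy threshold.
ENERGY_THRESHOLD = 1e-3  # illustrative value, not tuned for real microphones
active = [frame_energy(f) > ENERGY_THRESHOLD for f in frames]
print(len(frames))         # 50
print(active.count(True))  # 25
```

Production recognizers typically use overlapping windows and turn each frame into spectral features (such as a mel spectrogram) rather than a single energy value, and they rely on trained models rather than a fixed threshold to separate speech from noise.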

Sesame’s CSM: The New Voice AI

Sesame AI’s Conversational Speech Model (CSM) powers its popular voice assistant Maya. Unlike pipeline systems that add speech only in a separate final step, CSM processes text and audio together.

This system uses two neural networks:

- A backbone model that handles understanding and context

- A decoder model that creates natural-sounding speech

This design helps Maya follow the conversation and speak more naturally. In March 2025, Sesame released its base model, CSM-1B, as open source, letting others build on the technology.

Challenges for Voice AI

Despite big steps forward, voice AI still faces some problems:

- Accuracy Issues: Background noise, accents, and dialects can still cause recognition errors. About 73% of users cite accuracy as the biggest barrier.

- Understanding Context: Keeping track of longer conversations is still hard for many systems.

- Privacy Concerns: Voice data contains personal info. Many people worry about devices that might always be listening.

- Special Terms: Technical words in fields like healthcare and law are hard for voice systems to understand.
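Speech recognition accuracy is commonly measured with word error rate (WER): the number of word substitutions, deletions, and insertions needed to turn the system’s transcript into the correct one, divided by the number of words in the correct transcript. Below is a minimal sketch of that calculation. The medical sentence is a made-up example echoing the point about special terms, and real evaluation toolkits usually also normalize punctuation and casing before scoring.

```python
def word_error_rate(reference: str, hypothesis: str) -> float:
    """Word-level Levenshtein distance divided by the number of reference words."""
    ref = reference.lower().split()
    hyp = hypothesis.lower().split()
    if not ref:
        raise ValueError("reference transcript must contain at least one word")

    # dist[i][j] = minimum edits to turn the first i reference words
    # into the first j hypothesis words
    dist = [[0] * (len(hyp) + 1) for _ in range(len(ref) + 1)]
    for i in range(len(ref) + 1):
        dist[i][0] = i  # delete all i reference words
    for j in range(len(hyp) + 1):
        dist[0][j] = j  # insert all j hypothesis words

    for i in range(1, len(ref) + 1):
        for j in range(1, len(hyp) + 1):
            cost = 0 if ref[i - 1] == hyp[j - 1] else 1
            dist[i][j] = min(
                dist[i - 1][j] + 1,         # deletion
                dist[i][j - 1] + 1,         # insertion
                dist[i - 1][j - 1] + cost,  # substitution (or a match when cost is 0)
            )
    return dist[len(ref)][len(hyp)] / len(ref)


# Hypothetical example: a drug name misheard as two everyday words
# (1 substitution + 1 insertion over 5 reference words).
reference = "prescribe ten milligrams of lisinopril"
hypothesis = "prescribe ten milligrams of listen april"
print(word_error_rate(reference, hypothesis))  # 0.4
```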

How Voice AI Is Being Used

Voice AI is changing many industries:

- Customer Service: AI voice agents handle customer questions, cutting wait times.

- Healthcare: Voice AI helps with appointments, medication reminders, and medical notes.

- Smart Homes: Voice commands control lights, temperature, security, and entertainment.

As voice AI keeps getting better, the line between human and AI speech is fading. Systems that once struggled with simple commands now handle complex conversations that feel natural, and this is just the start of a new era in how we talk with machines.